If I can't measure it, I can't (or struggle to) design it

TL;DR

I always aim to drive product design work with a tangible and clear objective from the very beginning.

Learning the hard way

Early in my career as a UI designer, I was tasked with taking the insights from a user survey and exploring design recommendations to improve the overall user experience of a product I was working on.

I excitedly saw a world of opportunity and took the initiative (or so I thought) to essentially redesign the entire journey, along with a brand-new UI, flashy hover states and updated content. All of this was completely self-directed, without any user input or validation that these ideas would have any impact.

Somehow, stakeholders were convinced to buy into this new design, and so began the long and expensive task of implementing it and essentially rebuilding the entire front-end experience of the product.

Reflecting on this now, I realise I had started this work with unclear goals and had not held myself accountable to specific, measurable objectives. I had no understanding of the wider strategic goals of the product or why this design work was particularly important. Instead, I had taken it as an opportunity to have free rein over the product and redesign everything without a clear rationale. This no doubt cost the organisation unnecessary time and money developing a solution whose material impact was never clear.

These days I’m always very focussed on ensuring that the design work I produce is attached to a clear and tangible goal. It is difficult to strategise and plan without success measures in place. If I can’t tangibly measure the impact something is having, I struggle to design it in the first place.

I’m also now very wary of projects that are solutions disguised as problems. For example, I was working on an app where I was asked to ‘design a notification inbox’. However, no one seemed clear on what the objective was, how success would be measured or even where it appeared on the product roadmap. Having clear goals reduces unnecessary waste in the design process and ensures I’m not designing something that isn’t tied to a strategic objective.

How I ensure work is driven by clear objectives:

When working with stakeholders I always advocate for starting with the problem to solve rather than the perceived solution.

I work closely with Product Managers to ensure the product objectives and success metrics are clear. My most successful relationships have been those where I actively contribute to those discussions and we agree on the OKRs together.

Illustration source: popsy.co

Designing the design process at Cover Genius

TL;DR

I developed a new design process at Cover Genius by conducting internal research and providing a process toolkit for the team to use.

Overview

When I joined Cover Genius, I was given the opportunity to optimise and formalise the product design and content process. The objective was to develop a new process that could be scaled across all product teams.

Goals of the new process:

Deliver value into the products faster by reducing steps in the product development process

Mitigate the risk of misaligned expectations by involving stakeholders at every step

Remove silos and work as one team using the same software tools

Methodology

Listen - team interviews

The first step was to conduct a listening tour with the team members involved. I conducted 10 one-to-one interviews with designers, product managers, content managers and engineers. This research aimed to understand the key pain points and opportunity areas for those involved in the product design process.

Research objectives:

- Understand the key friction points in the current product design process
- Understand what the key steps in the process are and who is involved in each step
- Identify opportunity areas for improvement

Audit - create a journey map of the current process

Using the insights from the team interviews, I created a current state process map. The goal of this was to understand the current workflow that team members go through within the design process.

The pain points and opportunities for improvement were then attached to each stage of the journey. From here, it was clear which points of friction needed to be addressed first.

Journey map of the current design process

There were three areas for improvement that were prioritised to be tackled first:

Feedback loops

Project kick-offs

Content and design collaboration

It was also important at this stage to understand what was working well and the positive areas of the process which could be built upon.

Workshop - improving feedback loops

To improve the feedback process, I facilitated a cross-functional workshop with design and product. As a group, we reviewed all the identified pain points within the feedback process and voted on the areas that were driving the most friction.

As a group, we then took these opportunity areas and ideated on possible solutions. We gathered a wide range of solutions, then did another round of voting to decide which ones we thought would be most impactful.

These ideas were then carried over into the development of the improved design process, in particular:

Involve product, design and content at the beginning of the ideation process

Define a different content approval process

Develop more formalised feedback ceremonies that are documented and managed by a specific person

Workshop - improving kick-offs

Based on the previous research, it was clear that the kick-off process was sometimes fragmented, with members of the project team briefed in at different times in the workflow. A key opportunity for the new process was to streamline the kick-off and start creating value more quickly. The purpose of the workshop was to define how we would document the kick-off of a piece of work and what a kick-off ceremony looks like.

Ideating on how to structure project kick-offs

As a group we ideated on the information that a kick-off document would need to include. A draft doc was then created and shared for feedback before being turned into a template. We also did an activity where we defined the roles and responsibilities within that process.

Workshop - roles and responsibilities

Another important workshop was defining the different roles and responsibilities within the design process. Using a method from the Atlassian playbook, I got the team together to declare what they believed each other’s responsibilities in the process were.

Here we were able to identify overlaps and misaligned expectations.

Outcome of the roles and responsibilities workshop

Developing the content process

The content review and delivery workflow was another point of friction in the end-to-end process. Auditing the current experience made it clear there were operational inefficiencies and redundant duplication of work across software tools.

Having identified a new tool (Ditto), we were able to present a new process and pitch for the tool to be integrated into our workflow.

Crafting a new end-to-end product design and content process

The underlying principle of crafting a new process is that it is built on a strong foundation of both tools and mindsets. Before diving into the process steps it was important to identify the mindsets we wanted the team to adopt when approaching product design and going through the steps in the process. These three mindsets led the thinking for the specific steps in the journey:

The process steps themselves were iterated on and adjusted based on peer feedback and the integration of other processes, such as the content review process.

The process steps, tools and frameworks were then presented back to the wider team for buy-in on the approach.

Outcome

I documented the new process steps as slides that could be easily shared and referenced at any point in the design process. Each step included information on who is involved, what happens, and specific tools and resources.

The design process steps documentation

I also created a process toolkit in Confluence. This is where teams can find resources and templates to help with each stage of the process along with an overview of the design process steps.

The design process toolkit

What’s next?

Onboard teams to the process through shared resources and presentations

Ensure all team members have access to the toolkit

Test and iterate on the process with live projects

Illustration source: popsy.co

Why I created a learning community at ClearScore

TL;DR

I created a community of learning within the design team, where every week junior designers would get together and learn something new about working in an agile product organisation.

Identifying the opportunity

From 2021 to 2022 I worked as a Senior Product Designer at ClearScore, a credit score and marketplace app based in the UK. I led a small design team including a junior product designer and a content designer within the marketplace squad.

While working on the product, I noticed there were gaps in the team's knowledge about the entire product development process. This made me reflect on my own experience of learning about product design, where the focus was mainly on UX and UI craft skills. There was less emphasis on the skills and understanding required to collaborate effectively with other teams involved in building the software. This led me to hypothesise that other designers may be facing similar challenges, being placed in engineering teams without a full understanding of the overall working environment.

Goal

I set myself a personal objective, framed as a question:

How might I create a culture of learning within the design team?

To begin with, I wanted to understand whether designers from other squads were interested. I contacted the wider community of junior designers (seven in total), initially through an anonymous survey and later through a collaborative workshop. Not only did I find that they were eager to learn more about product development, but I also discovered that they needed a forum to discuss problems within their teams and the design process as a whole. This presented an opportunity not only to establish a community for sharing knowledge but also to create a safe space where the group could gather and have open conversations.

Curating learning content

Once I understood the preferred learning style and schedule of the team, I chose to organise a series of weekly sessions for the learning group. Each session was carefully designed to concentrate on a specific aspect of working as a designer in an agile organisation.

To curate these sessions, I reached out to my contacts throughout the company, and thankfully, they were willing to contribute. Subsequently, I developed a program where each week a different representative would present. This included engineers, product managers, agile delivery managers and product marketers.

Outcome

Before I left ClearScore, the learning group took part in 10 sessions in total, covering topics such as:

What is the ClearScore product development cycle?

How do I work with a product manager?

What is agile?

How do I work with an engineer?

What is our go to market strategy?

What are our design principles?

What is service design?

How do I craft a case study?

My key takeaways for future teams I work on:

Identify knowledge gaps

It is important to audit and understand the team’s knowledge gaps before diving into the product development process. Understand which areas the team wants to learn more about and where they have the most confidence.

Leverage network connections

Curating the learning sessions would not have been possible without input from colleagues who could bring their expertise. Because I had fostered a diverse network across the business, I was able to draw on these connections to build a robust learning program.

Ensure there is a safe space for discussion

This exercise also unearthed the need for a forum where designers felt they could raise concerns and questions without fear of judgement. This is important not just for the design process but also for the wellbeing of the team.

Illustration source: popsy.co

How we’re using continuous discovery to become an insights-driven squad

This post originally appeared on the ClearScore Design blog

TL;DR

Our squad gets together every week (yes, including engineers) to talk with a ClearScore user.

What exactly is continuous discovery?

The framework we are using was created by Teresa Torres, a product discovery coach. She has written a whole book on the end-to-end methodology and how to implement the process in your team.

A working definition of continuous discovery:

Regular touchpoints with customers

By the team building the product

Where they conduct small research activities

In pursuit of a desired outcome

Let’s break that down and explain how it works for our squad:

Regular touchpoints with customers

Every week we schedule a remote interview with a ClearScore user. They are invited to tell us about their relationship with our product and we get to hear first hand their needs and pain points surrounding their experience.

By the team building the product

It’s really important for us that the whole squad is given the opportunity to hear first hand what our users have to say. Each week, the interview is attended by product designers, content designers, product managers, engineers and test engineers from front end, back end and native applications.

We try to follow a mantra used by the Government Digital Service: research is a team sport. Every member of the squad has the voice of the user guiding them, rather than relying on one or two team members to educate everyone else. Not only is the whole squad listening, but they also contribute to the documentation and help agree the needs and pain points we have uncovered in the interview.

Where they conduct small research activities

Each interview lasts one hour a week, which is a small commitment in our busy calendars. We can use that time however we like: to get feedback on the live product, test prototypes or simply hear a story about a user’s experience of applying for credit products. Over time, we have adapted our interview guide to focus on the specific areas of interest we think will give us the most value.

In pursuit of a desired outcome

As with any good product framework, continuous discovery is anchored by a specific objective and measurable outcome. As our squad is part of the Marketplace function, our outcomes are commercially driven but always with a user-centred focus. It is up to us to figure out what is causing friction within the conversion funnel and to help users select the right products for them.

So what are the benefits of this framework compared to traditional user research methods?

In a traditional user research framework, the bulk of research might be done up front to guide the project for weeks, if not months. That can mean it is not always possible to revisit or validate the insights identified, or to speak further with users as the work develops.

A more traditional research approach which has specific milestones with larger discovery outputs at each stage

With continuous research, we can adapt and change our questions over time as we learn more about our users’ behaviours. It also means we are always less than a week away from speaking to a user if we need quick feedback.

Continuous discovery means small and iterative research studies each week

What do we actually do every week?

Our sessions are remote or hybrid, so we all jump on a Zoom call and the facilitator leads the session. We use a trusty interview guide to draw out the specific insights we want to gather that week.

The squad getting together to watch a weekly interview

Our interview is usually split into two sections:

Credit product story

App walkthrough

The credit product story starts with a simple question: Please tell us about the last time you applied for a credit product, such as a loan or credit card. Then we go deep into the details. We want to know the full end to end experience of what the user went through. Sometimes they talk about using ClearScore, sometimes they don’t talk about our app at all.

This gives us full context of the journey before a ClearScore user even picks up a device. What led them to needing that loan? What was the reason they started thinking about it? Where were they when they started applying? What time of day was it?

This question also centres the person in the specific story. Anchoring the conversation in a lived experience means people are less likely to generalise about their behaviour or, even worse, speculate about other people’s behaviour; we’re not interested in that.

We find there is more truth and richer insight in asking about things users have actually done rather than things they say they do.

The app walkthrough is where we ask a user to open their ClearScore app. Based on the story they have just told us, we ask them to show us how they would complete this task on the ClearScore app. Here we get to know about their mindset as they go through different stages of the app flow. We get feedback on usability, content, and comprehension. There is a lot of rich insight from simply getting a user to talk through their experience of looking for a card or loan on ClearScore.

After the session the squad has a debrief and agrees on the specific needs, pain points and opportunities that have been identified as part of the interview. This is then added to our opportunity solution tree.

The opportunity solution tree is a framework, created by Teresa Torres, that allows us to visualise a problem space quickly and easily. At the top of the tree is the specific outcome we are trying to achieve. Under that, we group the opportunities we have heard from users into common themes and child opportunities.

When it comes to testing solutions this tree framework allows us to see the full opportunity space of the product we are working on. It’s also a useful artefact for onboarding people to the team so they can understand all the discovery we have done.

We also create artefacts that allow other teams to see the insights we have gathered. We create a research snapshot of every interview which is a visual overview of the customer we spoke to and the main things we learned.

After each interview we create a snapshot of what we learned which can be shared with other teams

Here are three ways we keep our practice successful:

Distributed responsibility

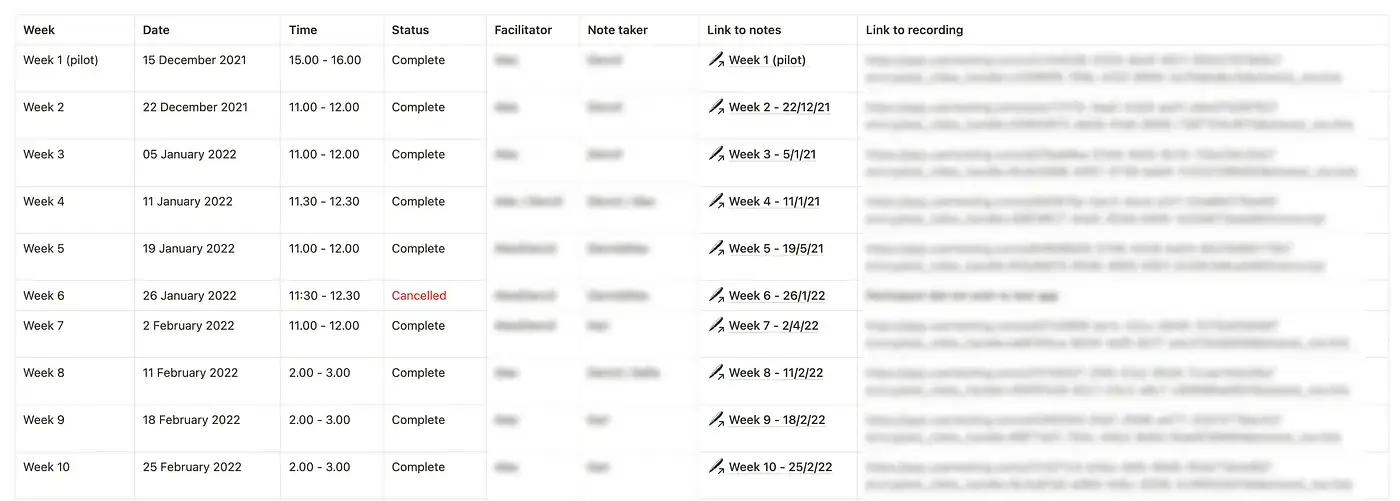

Operationalised logistics are important so that there are no bottlenecks in the process relying on one person. That means the team takes turns to facilitate, take notes and set up the interviews themselves. We use tools such as Notion, Miro and usertesting.com to streamline recruitment and documentation.

Logistics and operations are crucial to keeping the process running smoothly each week

Attendance accountability

As mentioned, we believe research is a team sport, and our goal this year is to become an insights-driven squad. That means keeping everyone accountable for their own research exposure hours. To do this, we have made user research attendance one of our product OKRs, tracked the same way as any of our other product-specific metrics. Our goal is for 80% of the squad to attend at least 3 interviews in the first quarter of the year.

Research attendance is tracked and contributes towards our product OKRs for the quarter
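To make the attendance target concrete, here is a minimal sketch of how an OKR like this can be checked at the end of a quarter. It is purely illustrative and not our actual tracking setup: the function name `attendance_okr_met`, the squad roles and the attendance counts are hypothetical, and the sketch assumes attendance is recorded as a count of interviews per squad member.

```python
# Hypothetical sketch: checks an attendance OKR of the form
# "at least 80% of the squad attends at least 3 interviews in the quarter".
# The names and counts below are invented for illustration.


def attendance_okr_met(attendance: dict[str, int],
                       min_interviews: int = 3,
                       target_share: float = 0.8) -> bool:
    """Return True if a large enough share of the squad hit the interview threshold."""
    if not attendance:
        # An empty squad cannot meet the target.
        return False
    # Count members who attended at least the minimum number of interviews.
    qualifying = sum(1 for count in attendance.values() if count >= min_interviews)
    return qualifying / len(attendance) >= target_share


# Example quarter: interviews attended per (hypothetical) squad member
quarter = {
    "designer": 6,
    "content_designer": 5,
    "product_manager": 4,
    "frontend_engineer": 3,
    "backend_engineer": 2,
}

print(attendance_okr_met(quarter))  # 4 of 5 members qualify (80%), so True
```

The comparison uses `>=`, so a squad landing exactly on 80% counts as meeting the target; a stricter reading of the OKR would swap this for `>`.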

Measuring success

We’re not just doing research for the sake of research. We make sure the work we are doing always ladders back up to the product objectives we are trying to solve for. We do this by balancing the needs of our users with the goals of our product and identifying the most valuable opportunities from there.

The biggest indicator of success from this process has been hearing an engineer say, ‘Remember that user who said X? Well, how about we try Y?’

This was when we knew the squad was becoming fully engaged and starting to look at problems through the lens of the customer.

It’s been great to see that not only are we achieving our research participation goals, but squad members are also coming back for more. This shows they are attending not because they have to but because they have a genuine interest in our user research.

Recommended Reading: Continuous Discovery Habits by Teresa Torres

Illustration source: popsy.co